We didn't set out to build our own LLM infrastructure layer. Like most teams in early 2024, we started with what was available off the shelf — the Vercel AI SDK looked like the obvious choice. A clean React hook, built-in streaming, multi-provider support. Ship fast, iterate later.

That worked for about two months.

Then our users started having real conversations. Multi-step analysis across ad platforms. Reports that pulled from Google Ads, Facebook, and Shopify in a single thread. Conversations that ran 30+ messages deep with data-heavy tool results filling up the context window.

The SDK was designed for chatbots. We were building an agent. And the gap between those two things turned out to be enormous.

The Starting Point

The initial setup was textbook. Frontend used the useChat hook from @ai-sdk/react. Backend used streamText from the ai package. Pick a provider, pass your messages, stream the response. Done.

The SDK handled a lot for us:

Streaming protocol between server and client

Message state management on the frontend

Basic tool calling with

maxStepsProvider abstraction (swap Anthropic for OpenAI by changing one line)

For a proof of concept, this was great. Users could ask questions, get streamed responses, and even trigger basic tool calls. We shipped it, got early feedback, and started building the real product on top of it.

Where It Broke Down

The problems didn't come all at once. They trickled in as we built more sophisticated features, each one revealing a limitation we hadn't anticipated.

Problem 1: Limited Metadata Support

Our frontend needed to send more than just the message text — which ad accounts were selected, which model the user preferred, whether certain tools were enabled, feature flags, API credentials. The SDK doesn't have a first-class way to send structured metadata alongside messages. The only escape hatch was experimental_prepareRequestBody — explicitly marked as experimental, with no stability guarantees.

On the server-to-client side, it was worse. The only way to send metadata back during streaming was through a generic, untyped data channel. We ended up stuffing everything into response headers and parsing them manually.

Problem 2: The Tool Calling Wall

This was the big one.

The SDK's approach to tool calling is maxSteps — you tell it the maximum number of tool-call rounds, and it loops automatically. For simple cases like "what's the weather?", this works fine.

For what we were building, it was fundamentally insufficient:

No mid-loop intervention. After a few tool calls returning large datasets, the context window would be nearly full. We needed to pause between steps, check the token budget, and potentially summarize.

maxStepsprovides no hooks between iterations.No dynamic tool loading. Not all tools are known upfront. Our agent has a meta-tool that discovers and loads new tools mid-conversation.

maxStepstakes a static tools object at the start and doesn't support mutation.No custom execution. Our tools aren't local functions — they're MCP (Model Context Protocol) tools running on remote servers via JSON-RPC. The SDK's tool execution model expects a synchronous

executefunction.No incremental persistence. We needed to save every thinking block, text chunk, and tool result to the database as it streamed — not after the entire conversation completed.

No conditional termination. Some tools signal that the conversation should stop (e.g., a report has been generated).

maxStepsonly stops when the LLM saysend_turnor you hit the step limit.

Problem 3: Provider Features Were Hidden

The SDK's provider abstraction was its selling point — swap providers with a one-line change. But that abstraction hides the features that make each provider useful in production.

For Anthropic:

Prompt caching requires annotating specific content blocks. Not exposed.

Extended thinking requires beta headers. Not exposed.

1M token context window requires a separate beta flag. Not exposed.

Detailed token usage (cache hits vs. regular) gets reduced to just

promptTokensandcompletionTokens.

For Gemini:

Implicit caching works differently from Anthropic's explicit approach. The SDK normalizes both away.

Thought signatures require preserving opaque tokens between turns. The SDK's message transformation strips these.

Problem 4: Costs Were Spiraling

Without prompt caching, every turn resent the entire system prompt and tool definitions at full price. For a 20K-token prefix on every turn of a 10-turn conversation, that's 200K wasted tokens. At production scale, unsustainable.

The Decision

We had a choice: keep patching around the SDK's limitations, or take control of the server-side pipeline entirely.

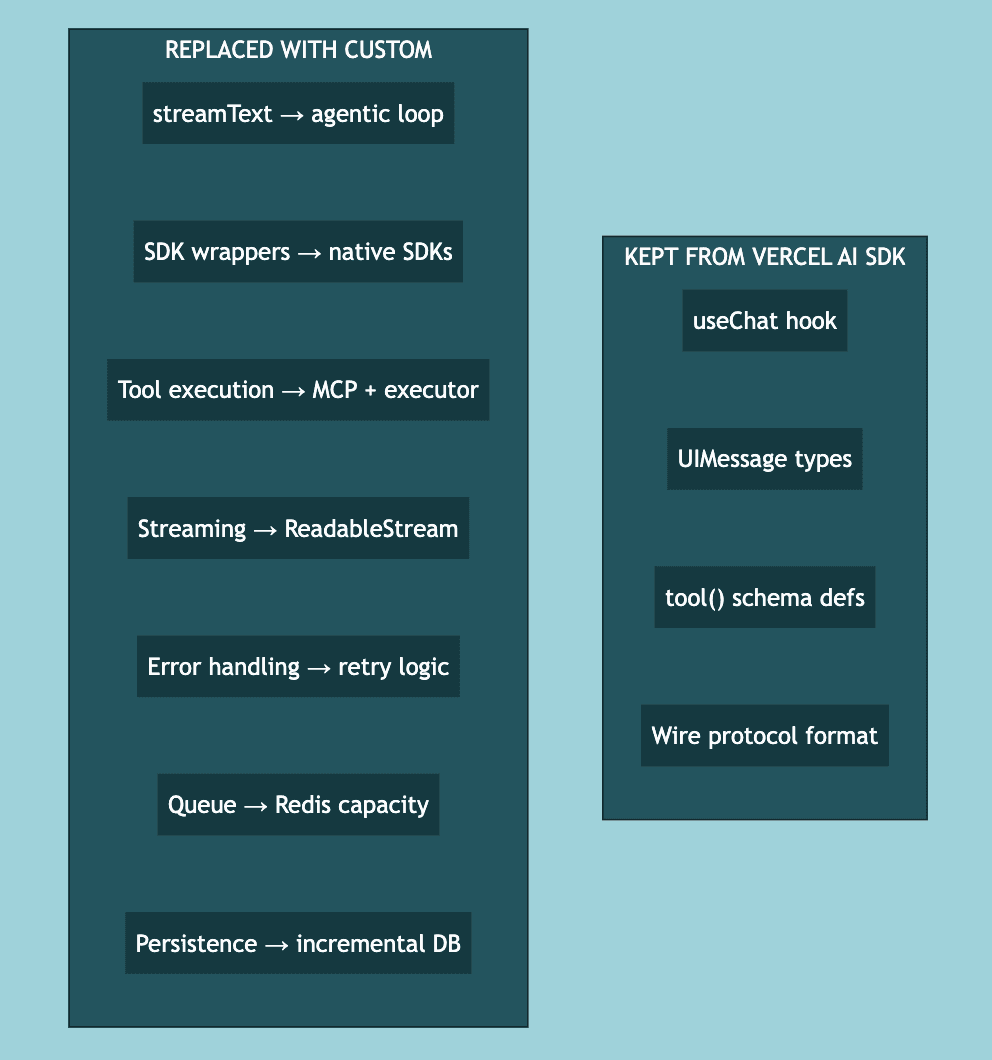

We chose the latter, with one important constraint: keep useChat on the frontend.

The React hook was good. It handled message state, input management, streaming display, loading indicators. Reimplementing all of that would have been wasteful. But we needed full control of what happens between the API route receiving a request and the response streaming back.

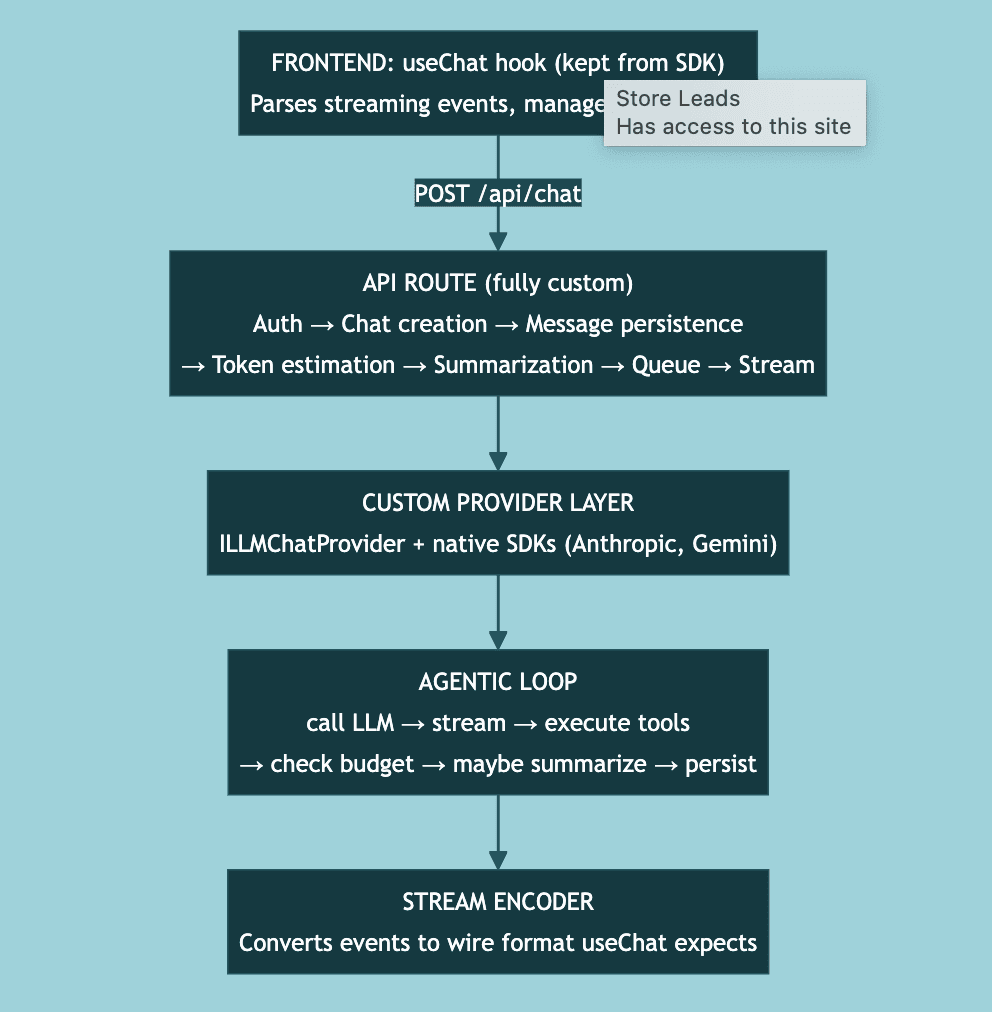

The High-Level Architecture

Here's what we built, at a high level:

The key insight: the wire protocol between server and client is stable and documented. We can encode it ourselves. That lets us keep the frontend hook while completely replacing the backend.

Was It Worth It?

Absolutely — but it wasn't free.

The custom engine is roughly 3,000 lines of code across the provider layer, the agentic loop, the stream encoder, and the queue system. That's code we maintain, test, and debug ourselves.

The payoff is control. We can cache prompts and save 60%+ on costs. We can summarize mid-conversation and keep long threads working. We can load tools dynamically. We can track token usage down to the cache-hit level.

If you're building a simple chatbot, the Vercel AI SDK is great. Use it.

If you're building an agent — one that calls tools autonomously, manages context windows, optimizes costs, and handles production traffic — you'll likely end up here eventually. The question is just whether you plan for it upfront or discover it the hard way.

We discovered it the hard way.

Written by the GoMarble engineering team. We're building AI tools for performance marketers. If this kind of work interests you, we're hiring.